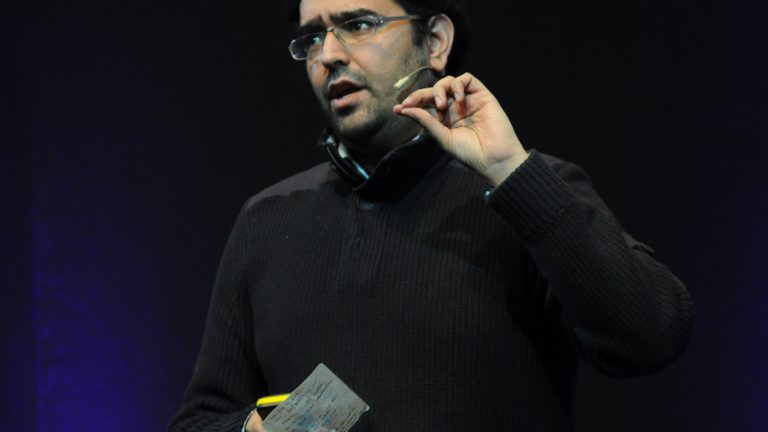

Azeem Azhar is a British writer, analyst, and entrepreneur whose Exponential View newsletter is required reading in technology circles. In his new book, The Exponential Age, he argues that technology, from biotech to renewable energy to social media, is moving too fast for our existing social structures to keep up with, with potentially profound consequences for human civilization. He spoke to Rest of World in London, shortly after Facebook was rocked by accusations from whistleblower Frances Haugen that despite its enormous global reach, the company devoted only a small fraction of its resources to non-English language moderation and oversight and that it knew its Instagram platform contributed to mental health issues in teenagers.

This, Azhar said, is an example of “exponentiality” in play: a company whose impact has drastically outgrown its roots as a platform for student interactions, to become a piece of transnational infrastructure with something close to monopoly power on information. A few hours after Azhar met with Rest of World, a glitch took Facebook and all of its properties, including Instagram and WhatsApp, offline for five hours. The outpouring of frustration, concern, schadenfreude, and joy showed, definitively, how deeply integrated the company is into economies and societies but also how fragile that dependence is. “So much monopoly,” Azhar said afterward, via text message.

The following interview has been edited and condensed for clarity.

You talk in your book about the sense of liberation that a lot of people felt in the early days of the internet, individually and on a societal level. Is there a moment for you when you sensed that the internet had suddenly switched from being a democratizing, freeing place to one that was owned by someone else?

I fell into the internet — I call it falling in because it felt like that — in 1991. I’m not sure one would fall into the internet in the same way today. If your first interactions were with Instagram and Facebook, you really don’t get the same sense of discovery.

The moment where things start to shift is when we ended up thinking about commerce and convenience, rather than where the web started, which was criticality and collaboration. The internet wasn’t really convenient in 1994 or 1995, but it was a very collaborative space.

There was a moment where we replaced this idea of the internet being a medium that we can all write to and participate in with one that is mediated. That happened at some point after social networks and the smartphone started to arrive. It’s a combination of the nature of those platforms and the prevalence of the technologies, which meant the economic rewards of getting this right rose significantly.

And so there’s a distinctly different feel to the internet of 2013 or 2014 compared with the one that you might have had in 1997 or 1998. It’s not just that it’s easier and I’m yearning for a world of cars with manual choke and manual transmission and crank-up starter handles, but it’s that the programmability of the internet and its endpoints has turned into something that is increasingly permissioned by major platforms.

Alongside that, of course, has come the fact that we’ve brought the brilliance of this technology to billions of people. And I suppose the question is, could one get that brilliance and ubiquity without this power shift?

The regulation of large tech platforms is once again in the spotlight. You talk about the origins of platforms like Facebook in your book, and how these companies have grown from the frat house to becoming, effectively, infrastructure. They start out as systems to rate the attractiveness of women and end up caught in geopolitics and embroiled in political violence.

The internet wasn’t designed to breach national boundaries. In fact, it was designed in response to the U.S. Defense Department wanting tough networks that were resilient to a sovereign state attack. And so the internet spread without anyone really asking questions about national-level governance.

Virtually every computer that was connected to the internet that I’ve used in the last 30 years has been an American computer, sending packets over American routers. It’s only when Huawei shows up in the last 20 years that that starts to change. You hear this often that the internet was global and borderless. It was global and borderless in the sense that every company that made every product on it was American, and 90% of the people involved in the bottom-up governance of it were also American, and it came from that political culture.

We end up having to think quite hard about where do we draw the lines. Recently, the Russian government told Apple and Google that they’ve got to take down the Navalny app [a pro-democracy platform created by supporters of Russian opposition leader Alexey Navalny]. If we believe the Russian government is rightly constituted, and it’s a legitimate government of Russia, and if there’s no U.N. resolution, is the Russian state not on the same platform as, say, the Germans when it comes to removing Nazi material? We can say we feel uncomfortable with it, and maybe we do. But this is where we have a really hard question about the ideas of rights-based thinking and democratic values and the extent to which they should be embedded in these platforms, or not.

That is not really just about internet governance, because the question about Apple and Google and their app stores is not really an internet governance question; it’s about the commercial activities of private companies.

So what is the solution?

I wish I had an easy answer other than to say that we’re going to have to establish a set of principles that stand above all national interest, and find places where that dialogue happens.

I suggest it should be in newer organizations, rather than going to the old ones. And, like it or not, we have to find some way of getting the technology companies in because they actually run the infrastructure and they have the capabilities. We’re going to have to get them to participate as their social license to operate.

Do you think that the platforms are actually equipped to make these kinds of governance decisions and to implement them?

I think there are two or three different questions. One is how do they make the governance decisions? The second is should they be making the governance decisions? And the third is do they have the resources and the capabilities?

How senior a group within Facebook or any of these platforms is running these governance aspects? How serious is it on the corporate dashboard? Do they record growth and engagement metrics and show them in real time? Or do they have murders committed by people using the platform? I do think they have the resources. I think that there are significant and deep resources available for implementation. Then the question is who should actually be making those decisions?

We can look at things like the financial services industry, which is a very regulated industry, where fines are a normal part of business, but it’s big and successful and profitable and largely works for us because our economies seem to work. Businesses get the capital they need and so on. But in the financial services industry, the governance decisions around what is appropriate behavior are not made by the company.

I think that’s a similar model to the one required for these big platform companies. They’re big enough to have compliance departments, and there’s more than enough profit in the industry for these companies to be contributing to funding the regulator and the rule setter.

Then the question is what are the mechanisms the regulator or the rule setter should use? These companies present transparency reports, and the transparency reports talk about how much content they’ve taken down. But they don’t give enough information in the transparency report to really help you diagnose what’s going on.

I talk about the idea of observability, a mechanism where we start to say that because what you do is so fast moving, and because what you do is so significant and potentially problematic, we need to be able to observe the process so we can understand how your decisions get made. It’s a level beyond transparency, which is really a rearview mirror, and more of a real-time sense of what’s going on and how you are addressing this.