Images via Generated.Photos

A startup thinks it can solve diversity issues in stock imagery by replacing real people with algorithmically generated portraits of people who don't exist.

Generated Photos, a collection of 100,000 images of AI-generated faces, is owned by Icons8, a company that makes stock icons and illustrations for designers. According to the company, all of the portraits are free for anyone to use and available through a Google Drive link, as long as users link back to generated.photos whenever the images are used.

According to a blog post by Icons8, the company shot the training images in-house using real models (AI generates new images after "learning" from a pool of "training" data), and none of the training images were pulled from existing stock media or scraped from the internet without consent.

The portraits Icons8 chose to feature on the Generated Photos website are weirdly poreless and stiff, the telltale signs of algorithmic generation. Still, they're no less stiff or weird than traditional stock imagery of, say, a woman eating salad and deliriously laughing.

But many of the 100,000 images within the database itself are truly fucked up. Some have random eye sockets on their foreheads, or chins that melt into the background. Engineers still haven't perfected a method for generating fake faces that escape the uncanny valley. Artifacts like a hole in the head can appear when an algorithm doesn't have enough source images or time to train, or simply because of quirks in AI models.

The Generated Photos website doesn't disclose the demographic makeup of the models whose images were used for training, or any details about the dataset or the system itself. Icons8 did not respond to a request for comment.

"So, the resource lets creators fill their creative projects with beautiful photos of people that will never take their time and money in real life," Icons8 wrote in the blog post. The company says that it's "creating a simple API that can produce infinite diversity."

Its website previews a user interface that allows designers to filter the fake people into categories based on skin tone, ethnicity, age, and gender, alongside an image of a grinning blonde white woman.

"Generally, I raise my eyebrows at anything as 'infinite diversity,'" machine learning designer Caroline Sinders, a fellow with the Mozilla Foundation and the Harvard Kennedy School who studies biases in AI systems, told Motherboard.

"Who is determining or defining that? Are their definition or parameters open to audit? Can they be changed? What is their process for ensuring infinite diversity?" she said. "On [the] one hand, it is better to have a database full of images that are not real people; that doesn't violate anyone's consent. But what are those data sets used for? And who benefits from those systems? We need to ask that as well."

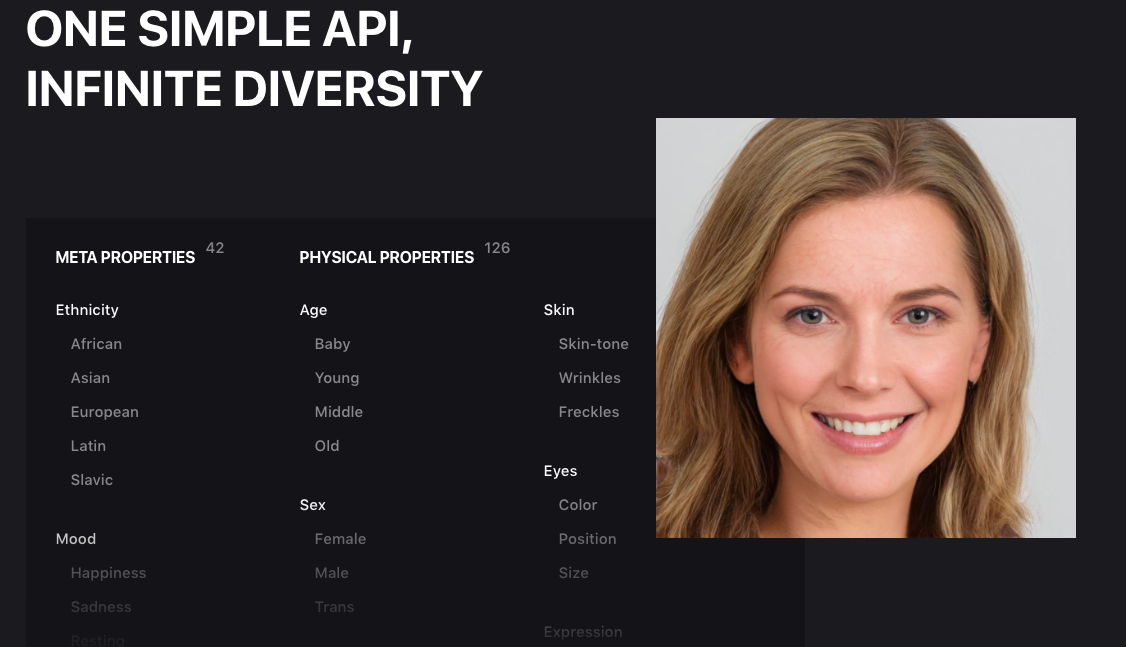

Implying that a lack of diversity can be "fixed" with an algorithm is dubious. Machine learning models are the product of human labor, whether it's an engineer coding an algorithm or Mechanical Turk workers being paid literal pennies to identify objects that help train an AI. The biases and prejudices of those people are often baked into the software.

The AI research community is struggling with a lack of diversity among the people who make these systems: findings published by the AI Now Institute earlier this year said the machine learning industry is experiencing a "diversity disaster," with systems built mostly by men. As one example of how this has played out, in 2018 Amazon pulled the plug on its own hiring AI after finding that the system was biased against female applicants.

Computer-generated humans, and CGI influencers in particular, are having a moment, and they are similarly under fire for attempting to achieve some idea of diversity without actually engaging or compensating people of color. "Shudu Gram," a CGI model featured on Fenty Beauty's Instagram, is the creation of a white man, who has been criticized for profiting off her representation of diversity. He calls her his "expression of creativity."

Another issue raised by the Generated Photos project is fake accounts on social media, and the ease with which people can make bot, spam, or catfishing profiles using algorithmically generated images. Earlier this year, spies used AI-generated portraits to make connections with high-powered government officials.

Instead of "infinite diversity," AI is giving us an infinitely expanding set of conundrums to navigate. But if you want diverse and ethically made stock images, you can download them from places like TONL, a culturally diverse stock image company, or Vice's Gender Spectrum Collection image library.
